At the recent AAMC Conference on Emerging Technologies for Teaching and Learning, Aquifer’s Dr. Leslie Fall (CEO and Founder), Joseph Miller (Manager of Curriculum and Learning Integration), and Dr. Emily Stewart (Chief Learning Officer) demonstrated how they used AI to solve a vexing problem in medical education.

The Clinical Feedback Challenge

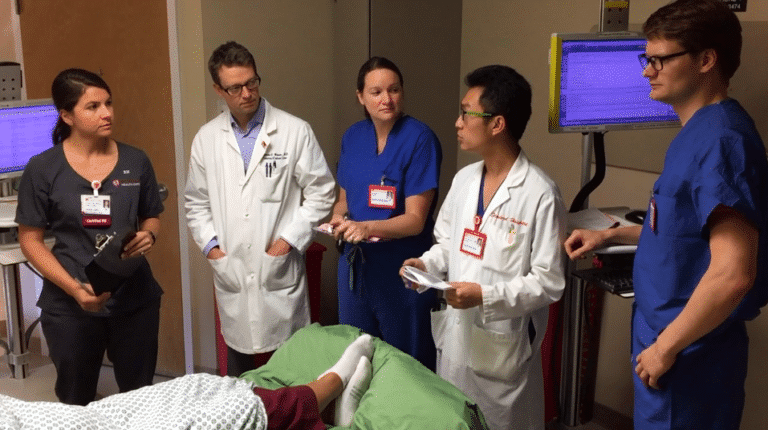

Picture a typical scene in medical education: A student on clinical rounds presents their case to an attending physician and team. Despite best intentions, the moment passes without substantive feedback. The team moves on to the next patient, leaving the student uncertain about their presentation’s effectiveness. Did they skip key details? Did their presentation meet expectations?

This scenario plays out daily across training environments. With diagnostic errors affecting up to 12 million U.S. adults annually and 90% of serious errors stemming from flawed clinical reasoning rather than technical failures, these seemingly minor missed opportunities for feedback have significant consequences for patient care.

Summary Statements: A Window into Clinical Reasoning

One of the most revealing indicators of clinical reasoning is the summary statement—a concise, one-to-three-sentence synthesis of key patient information. A well-crafted summary statement reflects how students process and prioritize clinical details, mirroring expert decision-making. For experienced clinicians, this skill is second nature. However, for novice learners, common pitfalls emerge:

- Some students overload their statements with excessive details, hoping the key information will stand out if everything is included.

- Others leave out critical elements, misjudging which findings are most relevant.

- Many struggle with structuring their thoughts, making their reasoning difficult to follow.

Because summary statements provide a window into a student’s clinical thinking, they offer an ideal opportunity for targeted feedback—yet this feedback is often missing or inconsistent.

Aquifer’s AI Solution

To bridge the gap in clinical feedback, Aquifer developed an AI-powered system that evaluates student-submitted summary statements in our virtual patient cases. The system acts as a digital clinical preceptor, providing structured feedback on each submission.

Three key elements drive the AI-generated feedback:

- Preceptor Role Simulation – The AI is designed to respond as an experienced clinician, assessing whether the student’s summary statement captures the most critical aspects of the case.

- Expert-Derived Rubrics – The AI compares the student’s statement against a validated rubric, assessing clarity, completeness, and diagnostic accuracy.

- Exemplar Comparison – The AI references an expert-crafted summary statement for each case, highlighting missing key details, identifying unnecessary elements, and suggesting improvements in clinical reasoning.

When a student submits a summary statement, the AI analyzes it and provides immediate, detailed guidance. If key information isn’t included or if the student’s reasoning is unclear, the feedback directs students toward improvement with specific suggestions. This structured approach ensures that students receive actionable insights within seconds—feedback that would otherwise take a faculty member significant time to provide.
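As a rough illustration of how the three elements above might come together, the sketch below assembles a role instruction, rubric criteria, and an expert exemplar into a single prompt for a language model. It is not Aquifer's implementation: the names `CaseMaterials`, `PRECEPTOR_ROLE`, and `build_feedback_prompt`, the prompt wording, and the placeholder texts are all assumptions made for this example, and the call to an actual model is left out.

```python
from dataclasses import dataclass


@dataclass
class CaseMaterials:
    """Hypothetical container for the per-case inputs described in the article."""
    rubric_criteria: list[str]   # e.g. clarity, completeness, diagnostic accuracy
    exemplar_statement: str      # expert-crafted summary statement for the case


# Illustrative role instruction only; this is not Aquifer's actual prompt.
PRECEPTOR_ROLE = (
    "You are an experienced clinician acting as a preceptor. "
    "Give constructive, specific feedback on a student's summary statement."
)


def build_feedback_prompt(student_statement: str, case: CaseMaterials) -> str:
    """Combine the role, rubric, and exemplar into one prompt string."""
    rubric_lines = "\n".join(f"- {criterion}" for criterion in case.rubric_criteria)
    return (
        f"{PRECEPTOR_ROLE}\n\n"
        f"Rubric criteria:\n{rubric_lines}\n\n"
        f"Expert exemplar:\n{case.exemplar_statement}\n\n"
        f"Student summary statement:\n{student_statement}\n\n"
        "Identify missing key details, unnecessary elements, and "
        "ways to improve the clinical reasoning."
    )


if __name__ == "__main__":
    case = CaseMaterials(
        rubric_criteria=["clarity", "completeness", "diagnostic accuracy"],
        exemplar_statement="(expert-written summary statement for this case)",
    )
    prompt = build_feedback_prompt("(student's summary statement)", case)
    print(prompt)  # this string would then be sent to a language model
```

Keeping the rubric and exemplar as per-case data, separate from the role instruction, is one way such a design could reuse the same preceptor framing across many virtual patient cases.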

Since launching in June 2023, the system has delivered over 650,000 feedback interactions. The feature has been well received by students, with 68% responding positively to the AI-generated feedback. One student noted, “Wow, this was a huge eye-opener. I definitely need more exposure to this and will be increasing my study time.”

Medical educators who reviewed the system’s output initially thought they could provide such feedback themselves, but they quickly acknowledged that the AI system’s ability to deliver consistent, quality feedback at scale makes it a valuable educational tool. One educator observed that while they might take 15-20 minutes to review and provide feedback on each case, the AI system can deliver detailed guidance in 10-20 seconds.